Discover a Delicious Way to Use Delta Lake! Yummy Delta - #1 Introduction

04 Mar 2023

Delta Lake is an open-source storage framework for building lakehouse architectures on top of data lakes.

It also brings reliability to data lakes with features such as ACID transactions, scalable metadata handling, schema enforcement, time travel, and many more.

Before you continue reading, please watch this short introduction:

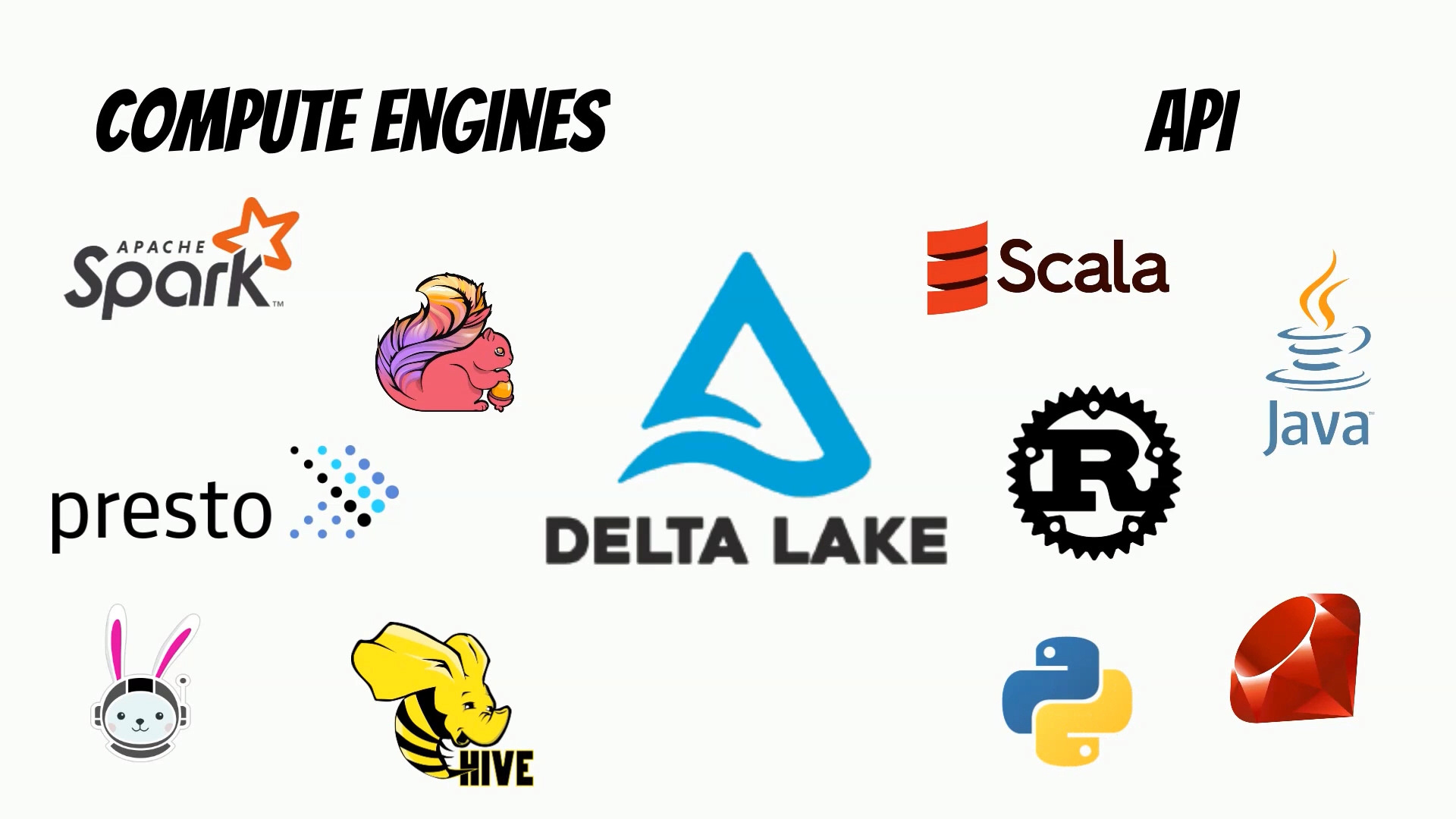

Delta Lake can be used with compute engines such as Spark, Flink, Presto, Trino, and Hive. It also has APIs for Scala, Java, Rust, Ruby, and Python.

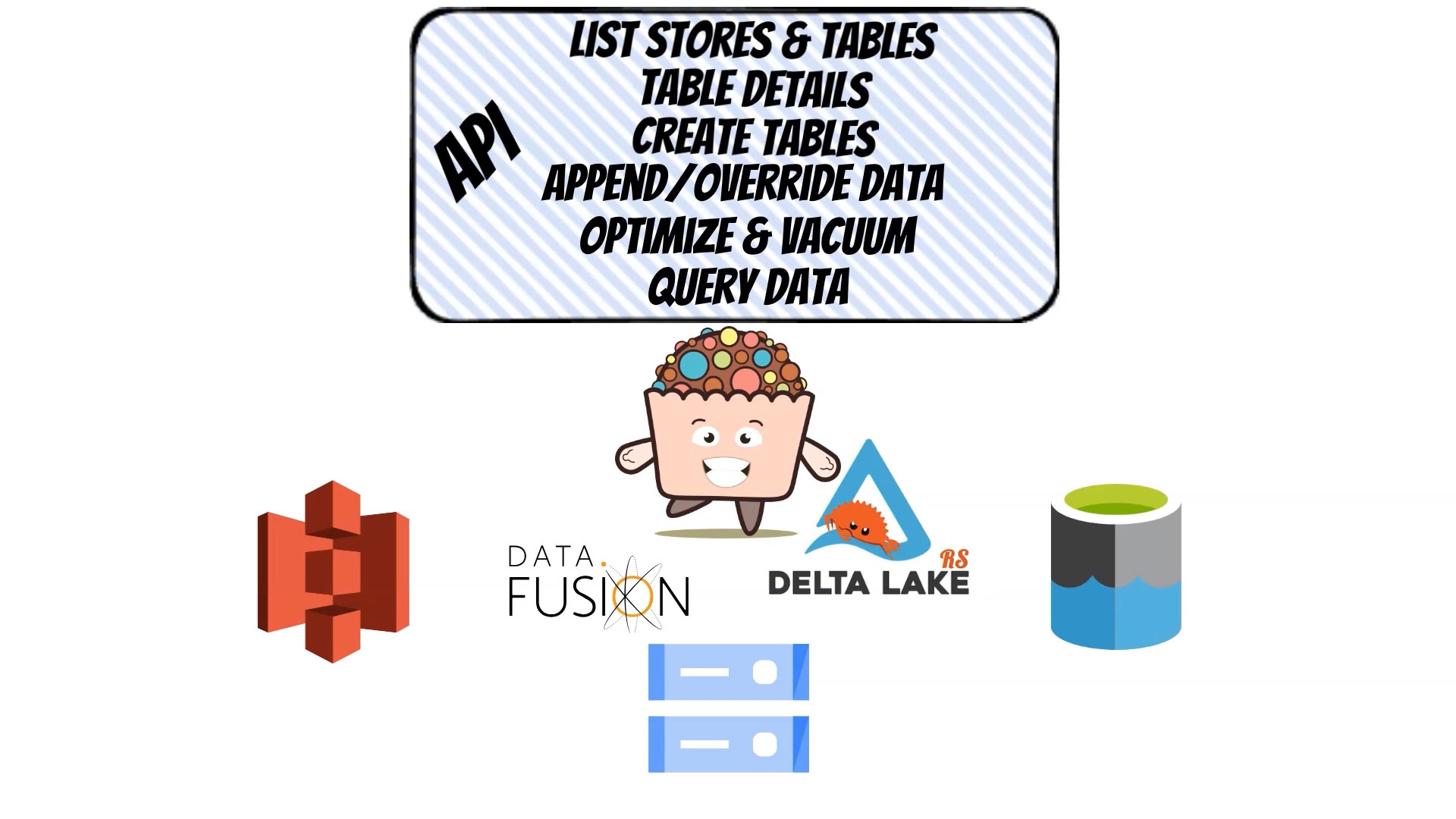

To simplify integration with Delta Lake, I have built a REST API layer called Yummy Delta.

It abstracts multiple Delta Lake tables and provides operations such as creating a new Delta table, writing, and querying, as well as optimizing and vacuuming. I implemented the whole solution in Rust on top of the delta-rs project.

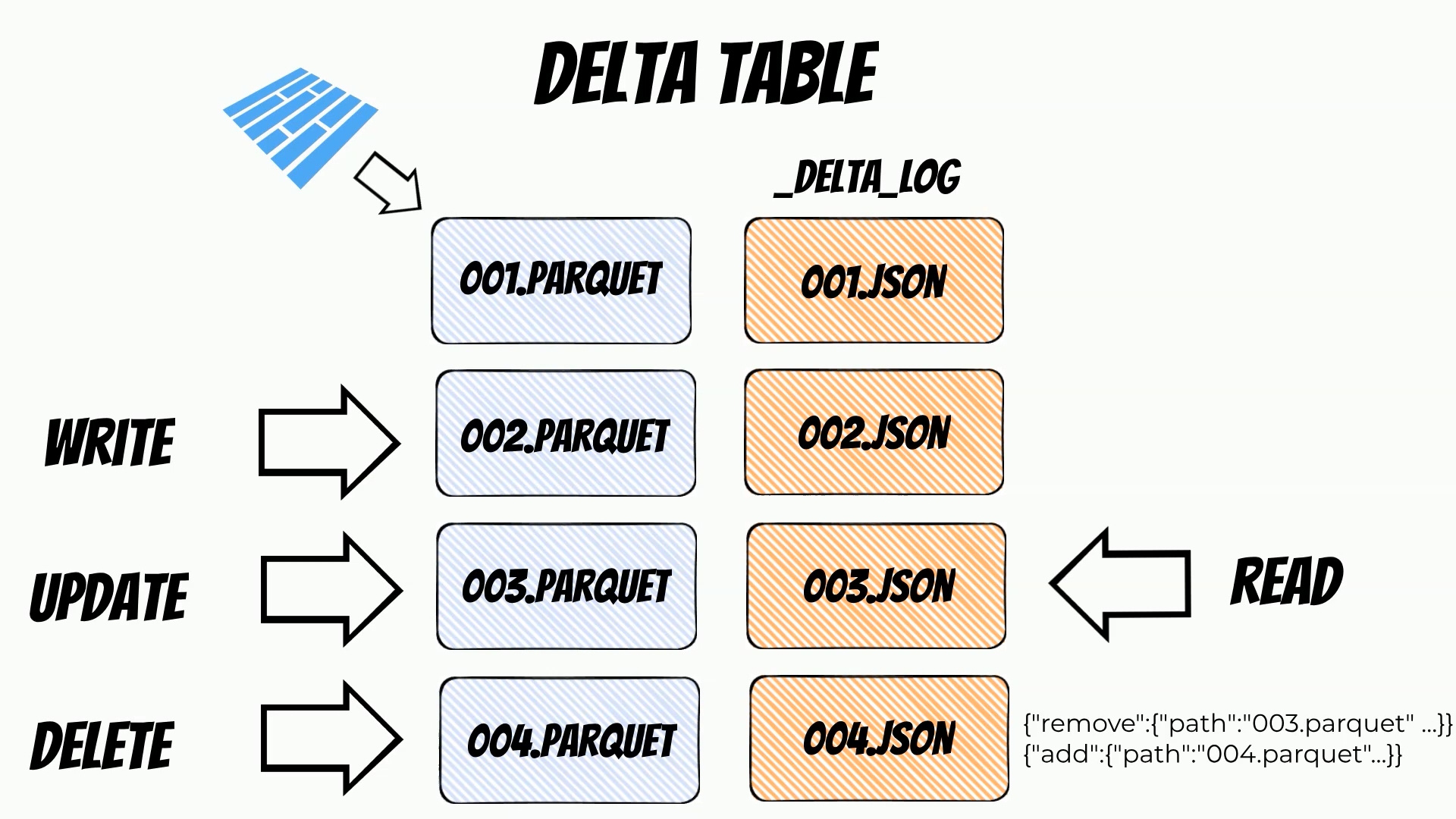

Delta Lake keeps the data in Parquet files. Parquet is an open-source, column-oriented data file format.

Additionally, it writes metadata to the transaction log: JSON files that record every operation performed on the table.

The transaction log is stored in the table's `_delta_log` subdirectory.

For example, every data write creates one or more new Parquet files. Once the write is done, a new transaction log file is created, which completes the transaction. Update and delete operations are carried out in a similar way.

When we read data from Delta Lake, on the other hand, the transaction log files are read first, and then the appropriate Parquet files are loaded according to the transaction data.

Thanks to this mechanism, Delta Lake guarantees ACID transactions.
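To make the read path more concrete, here is a minimal Python sketch of replaying the transaction log to find the Parquet files that currently make up a table. It relies only on the standard library and on the fact that each commit file in `_delta_log` holds one JSON action per line, with `add` and `remove` actions carrying a `path`. The function name `active_files` is my own, and the sketch deliberately ignores checkpoints and every other action type, so treat it as an illustration rather than a real Delta reader.

```python
import json
from pathlib import Path


def active_files(table_path):
    """Replay the JSON commits in _delta_log and return the live data files."""
    log_dir = Path(table_path) / "_delta_log"
    live = set()
    # Commit files are named with a zero-padded version number,
    # so sorting by name replays them in commit order.
    for commit in sorted(log_dir.glob("*.json")):
        for line in commit.read_text().splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                # A write (or the new half of an update) registers a file.
                live.add(action["add"]["path"])
            elif "remove" in action:
                # A delete, update, or overwrite retires a file logically.
                live.discard(action["remove"]["path"])
            # Other actions (metaData, protocol, commitInfo, ...) are skipped.
    return sorted(live)
```

A reader would then load only the files returned by `active_files("/tmp/delta-test-1/my_table")` (a hypothetical table location). Files that were removed in a later commit may still sit on disk until a vacuum runs, but they are no longer part of the table.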

There are several Delta Lake integrations, and one of them is the delta-rs Rust library.

Currently, the delta-rs implementation supports multiple storage backends, including the local filesystem, AWS S3, Azure Blob Storage, Azure Data Lake Storage Gen2, and Google Cloud Storage.

To manage multiple Delta tables across multiple stores, I have built the Yummy Delta server, which exposes a REST API.

Using the API, we can list and create Delta tables, inspect a table's schema, append or overwrite data in Delta tables, and run additional operations such as optimize or vacuum.

You can find API reference here: https://www.yummyml.com/delta

Moreover, we can query data using DataFusion SQL. Query results are returned as a stream, so we can process them in batches.
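As a rough illustration of batch processing on the client side, the sketch below groups a stream of lines into fixed-size batches of decoded rows. It assumes the rows arrive as newline-delimited JSON, which is my assumption and not something confirmed here; check the API reference for the actual response format. The helper name `iter_batches` is hypothetical as well.

```python
import json
from itertools import islice


def iter_batches(lines, batch_size):
    """Group an iterable of JSON lines into lists of decoded rows."""
    # Assumption: one JSON document per line; blank lines are skipped.
    rows = (json.loads(line) for line in lines if line.strip())
    while True:
        batch = list(islice(rows, batch_size))
        if not batch:
            return
        yield batch
```

Because the function accepts any iterable of `str` or `bytes` lines, it can be fed from a streamed HTTP response (for instance `response.iter_lines()` in the `requests` library) without loading the whole result into memory.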

You can install Yummy Delta as a Python package:

```shell
pip3 install yummy[delta]
```

Then we need to prepare a config file:

```yaml
stores:
  - name: local
    path: "/tmp/delta-test-1/"
  - name: az
    path: "az://delta-test-1/"
```
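Each store's `path` selects the storage backend through its URL scheme: a plain path points at the local filesystem, while `az://` points at Azure storage. The short Python sketch below shows that idea. The `BACKENDS` mapping and the `backend_for` helper are purely illustrative and hypothetical; the exact set of schemes the server accepts is an assumption on my part.

```python
from urllib.parse import urlparse

# Illustrative mapping only; the schemes actually accepted may differ.
BACKENDS = {
    "": "local filesystem",
    "file": "local filesystem",
    "s3": "AWS S3",
    "az": "Azure storage",
    "gs": "Google Cloud Storage",
}


def backend_for(path):
    """Guess the storage backend of a store from its path's URL scheme."""
    scheme = urlparse(path).scheme
    return BACKENDS.get(scheme, "unknown")
```

For the two stores in the config, `backend_for` would report the local filesystem for the `local` store and Azure storage for the `az` store.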

Now you are ready to run the server from the command line:

```shell
yummy delta server -h 0.0.0.0 -p 8080 -f config.yaml
```

Now we are able to perform all operations using the REST API.