Toxicless texts with AI – how to measure text toxicity in the browser

03 Oct 2021

In this article, I will show how to measure comment toxicity using machine learning models.

Before you continue reading, please watch the short introduction video.

Hateful, rude and toxic comments are a common problem on the internet that affects many people. Today, we will prepare a neural network that detects comment toxicity directly in the browser. The goal is to create a solution that detects toxicity in real time and warns users while they are typing, which can discourage them from writing toxic comments.

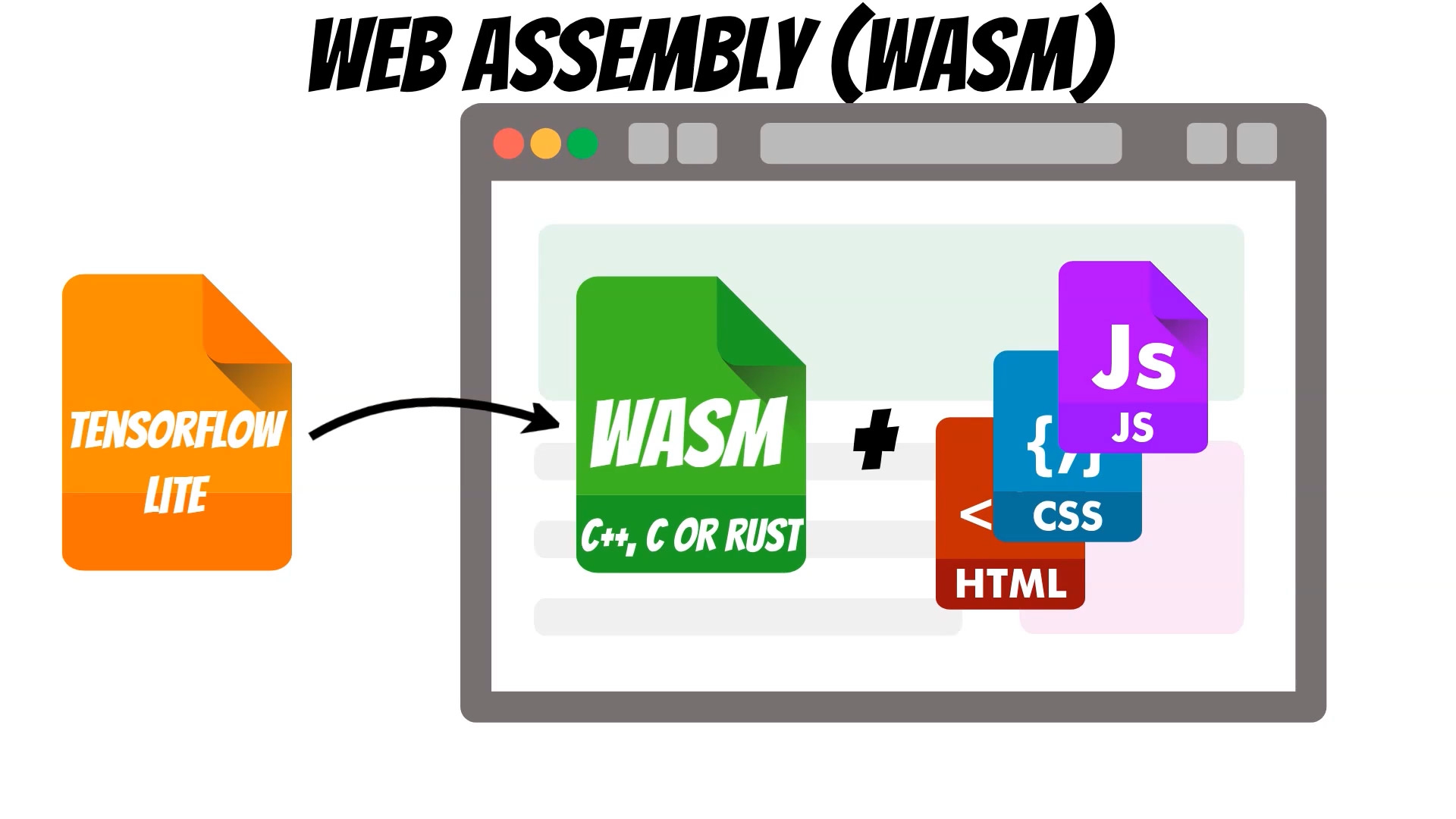

To do this, we will train a TensorFlow Lite model that runs in the browser using the WebAssembly backend. WebAssembly (WASM) allows running code written in C, C++ or Rust at near-native speed. Thanks to this, prediction performance should be better than when running the model with the plain JavaScript version of TensorFlow.js. Moreover, we can serve the model from a static page, with no additional backend servers required.

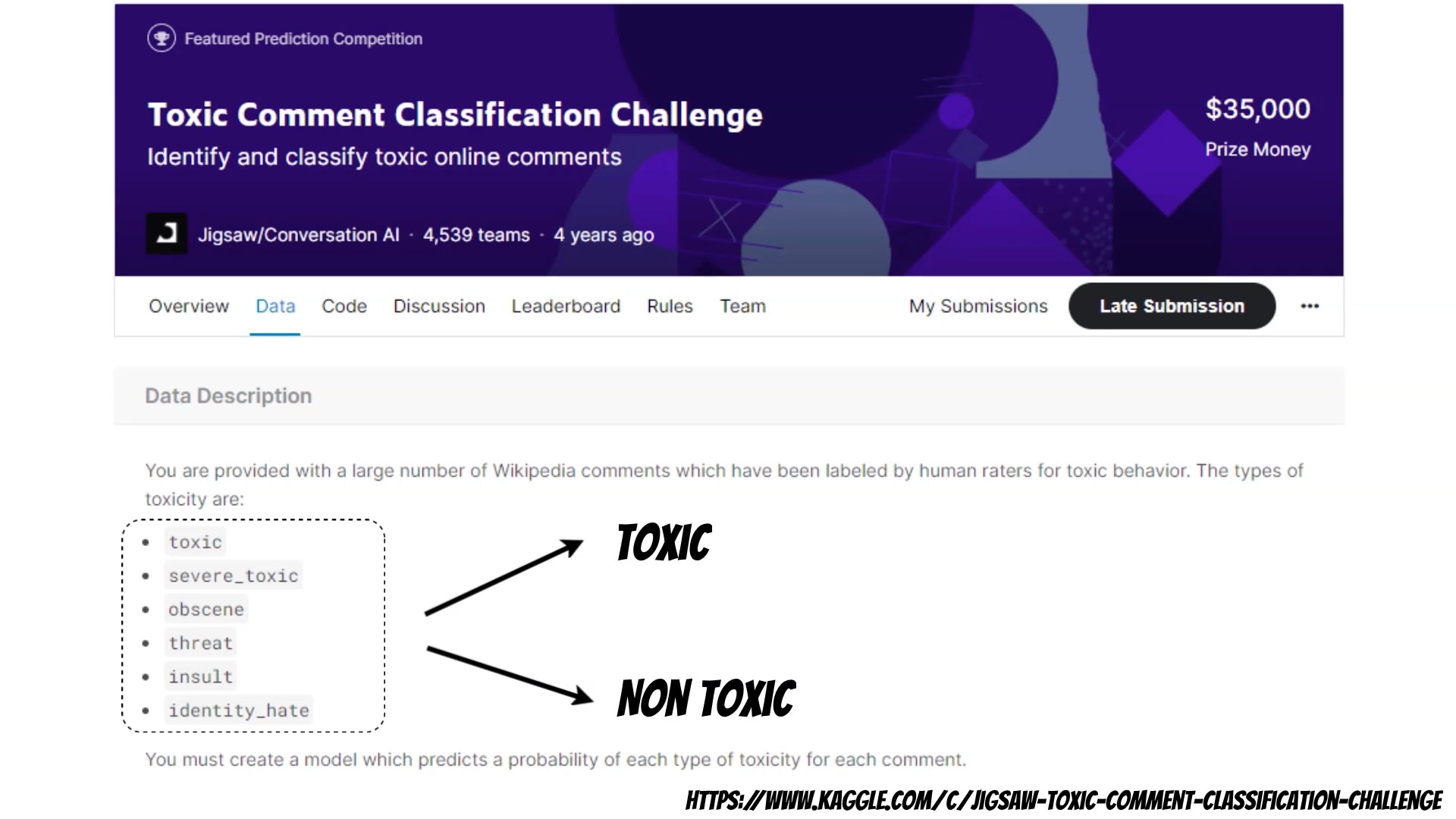

To train the model, we will use the training data from the Kaggle Toxic Comment Classification Challenge, which contains comments labeled with the following toxicity types:

- `toxic`
- `severe_toxic`
- `obscene`
- `threat`
- `insult`
- `identity_hate`

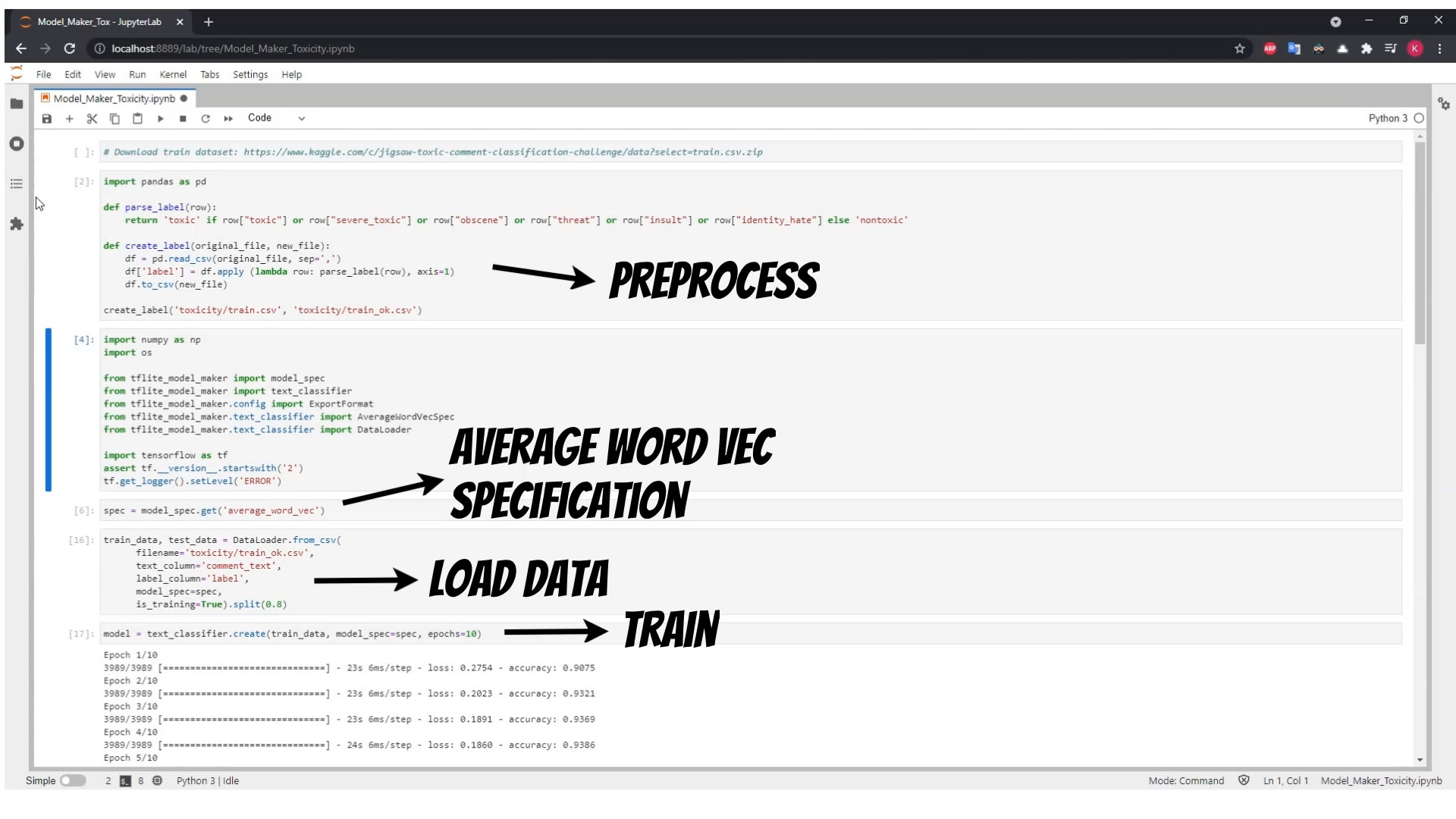

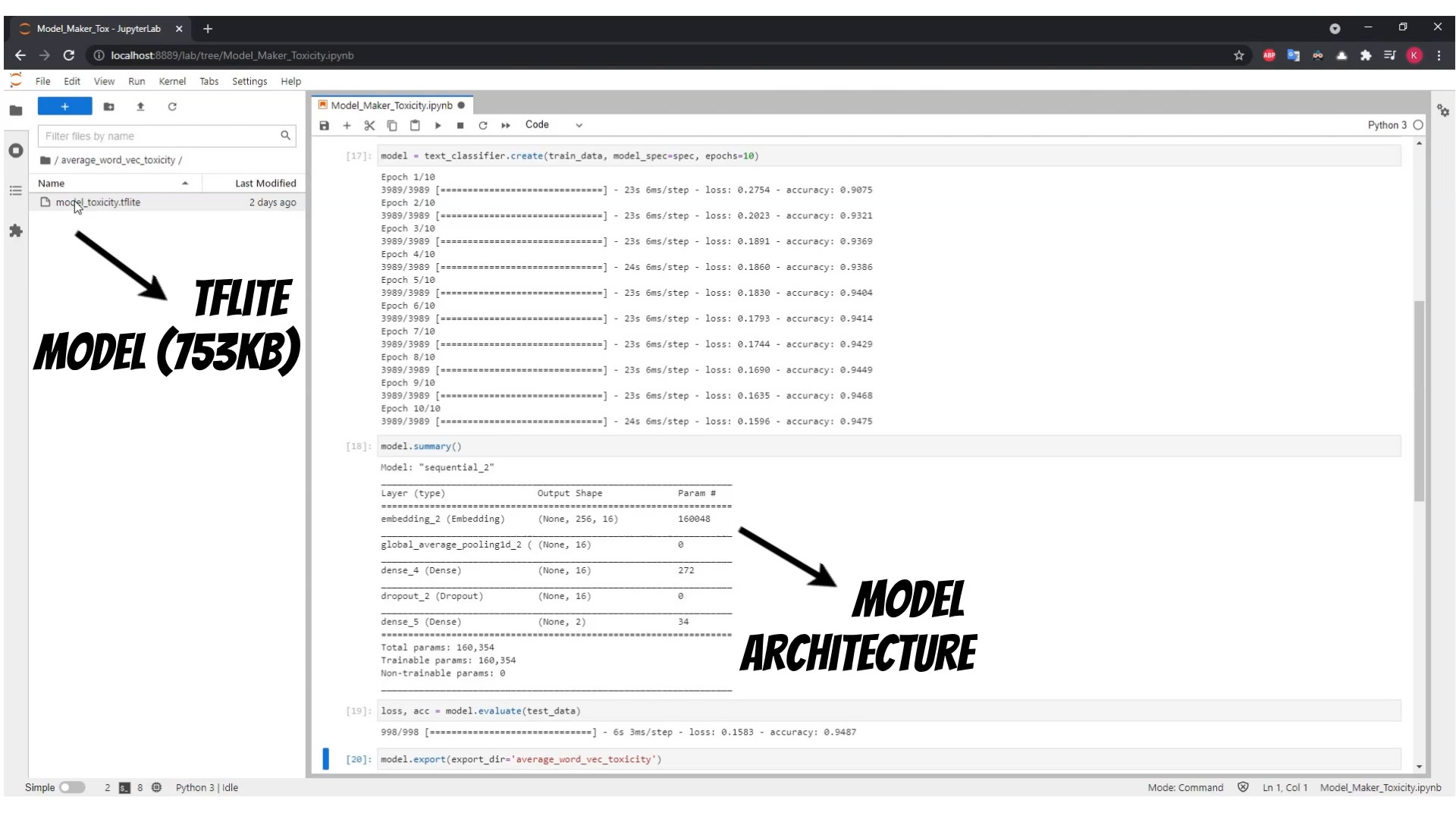

Our model will only classify whether a text is toxic or not, so we need to start by preprocessing the training data to turn the six toxicity labels into a single toxic/non-toxic label. Then we will use the TensorFlow Lite Model Maker library to train the model.
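The preprocessing step can be sketched in plain Python as follows. This is a minimal sketch, assuming the Kaggle `train.csv` layout with a `comment_text` column plus the six label columns listed above, and assuming a comment counts as toxic when any of the six labels is set; the notebook linked at the end of this article may use a different rule. The helper names `to_binary_rows` and `convert` and the output columns `text`/`label` are hypothetical.

```python
import csv
import io

# The six Kaggle toxicity label columns
LABEL_COLUMNS = ["toxic", "severe_toxic", "obscene", "threat", "insult", "identity_hate"]


def to_binary_rows(reader):
    """Yield (text, label) pairs where label is 1 if any toxicity flag is set.

    Assumption: "toxic" for our binary model means at least one of the six
    Kaggle labels equals 1.
    """
    for row in reader:
        is_toxic = any(row[col] == "1" for col in LABEL_COLUMNS)
        yield row["comment_text"], int(is_toxic)


def convert(src, dst):
    """Read a Kaggle-style CSV from `src` and write a text,label CSV to `dst`."""
    reader = csv.DictReader(src)
    writer = csv.writer(dst)
    writer.writerow(["text", "label"])
    for text, label in to_binary_rows(reader):
        writer.writerow([text, label])


if __name__ == "__main__":
    # Tiny in-memory sample in the Kaggle column layout (made-up rows)
    sample = io.StringIO(
        "id,comment_text,toxic,severe_toxic,obscene,threat,insult,identity_hate\n"
        "a1,Thanks for the helpful edit,0,0,0,0,0,0\n"
        "a2,You are an idiot,1,0,0,0,1,0\n"
        "a3,I will find you,0,0,0,1,0,0\n"
    )
    out = io.StringIO()
    convert(sample, out)
    print(out.getvalue())
```

With real data, `src` and `dst` would be files opened with `newline=""`, as the `csv` module documentation recommends.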

We will also use the Averaging Word Embedding specification, which creates the word embeddings and the dictionary (word-to-index) mapping from the training data itself, so we can train models for different languages.
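To illustrate the idea behind averaging word embeddings, here is a simplified, framework-free sketch: a vocabulary is built from the training texts, each word is mapped to an integer id, and a text is represented by the average of its word vectors, which a small classifier layer would then consume. This is not Model Maker's actual implementation; the tokenizer, the reserved ids for padding and unknown words, the tiny vector size and the random (untrained) vectors are all assumptions made for illustration. In the real model, the embedding vectors are learned during training.

```python
import random
import re
from collections import Counter

PAD, UNKNOWN = 0, 1  # reserved ids (assumption for this sketch)


def tokenize(text):
    # Simple lowercase word tokenizer (assumption; real tokenizers differ)
    return re.findall(r"[a-z']+", text.lower())


def build_vocab(texts, num_words):
    """Map the most frequent training words to ids 2..num_words-1."""
    counts = Counter(word for text in texts for word in tokenize(text))
    vocab = {}
    for idx, (word, _) in enumerate(counts.most_common(num_words - 2), start=2):
        vocab[word] = idx
    return vocab


def encode(text, vocab, seq_len):
    """Convert text to a fixed-length list of ids, truncating or padding."""
    ids = [vocab.get(word, UNKNOWN) for word in tokenize(text)][:seq_len]
    return ids + [PAD] * (seq_len - len(ids))


def average_embedding(ids, embeddings):
    """Average the vectors of non-padding tokens (zero vector if none)."""
    vectors = [embeddings[i] for i in ids if i != PAD]
    dim = len(embeddings[0])
    if not vectors:
        return [0.0] * dim
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


if __name__ == "__main__":
    train_texts = ["you are great", "you are an idiot", "great work"]
    vocab = build_vocab(train_texts, num_words=10)
    rng = random.Random(0)
    # Random vectors stand in for embeddings that training would learn
    embeddings = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(10)]
    ids = encode("you are great", vocab, seq_len=6)
    print(ids, average_embedding(ids, embeddings))
```

Because the vocabulary comes only from the training texts, the same code works for any language whose words the tokenizer can split, which is why this specification does not depend on a pretrained, language-specific model.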

A model based on the Averaging Word Embedding specification is small (less than 1 MB).

If we have a small dataset, we can use pretrained embeddings instead by choosing the MobileBERT or BERT-Base specification.

In this case, the models are much bigger: about 25 MB with quantization and 100 MB without quantization for MobileBERT, and about 300 MB for BERT-Base (based on the tutorial).
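A back-of-the-envelope estimate shows where these sizes come from and why quantization shrinks a model roughly fourfold: a float32 weight takes 4 bytes, while an 8-bit quantized weight takes 1 byte. The sketch below assumes an Averaging Word Embedding model with a 10,000-word vocabulary and 16-dimensional vectors, and roughly 25 million parameters for MobileBERT. Treat these parameter counts as assumptions. The estimate ignores the small classifier head, the vocabulary file and other file overhead.

```python
BYTES_FLOAT32 = 4  # one 32-bit float weight
BYTES_INT8 = 1     # one 8-bit quantized weight


def size_mb(num_params, bytes_per_param):
    """Approximate weight storage in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


# Assumed Averaging Word Embedding configuration: 10,000 words x 16 dimensions
avg_word_vec_params = 10_000 * 16
# Approximate MobileBERT parameter count (about 25 million)
mobilebert_params = 25_000_000

if __name__ == "__main__":
    print(f"Average word vec, float32: {size_mb(avg_word_vec_params, BYTES_FLOAT32):.2f} MB")
    print(f"MobileBERT, float32: {size_mb(mobilebert_params, BYTES_FLOAT32):.0f} MB")
    print(f"MobileBERT, int8: {size_mb(mobilebert_params, BYTES_INT8):.0f} MB")
```

This prints 0.64 MB, 100 MB and 25 MB, in line with the sizes quoted above.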

Using the simple model architecture (Averaging Word Embedding), we can achieve about 95% accuracy with a small model size that is appropriate for the web browser and WebAssembly.
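An accuracy figure like this is easiest to interpret next to a trivial baseline. The Kaggle data contains far more non-toxic than toxic comments, so a "model" that always answers non-toxic already scores high. The hedged sketch below computes accuracy alongside the majority-class baseline and the recall on toxic comments. The function names and the toy labels are made up for illustration and are not results from this article.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_baseline(y_true):
    """Accuracy of always predicting the most frequent label."""
    positives = sum(y_true)
    return max(positives, len(y_true) - positives) / len(y_true)


def toxic_recall(y_true, y_pred):
    """Share of truly toxic comments (label 1) that the model flags."""
    preds_for_toxic = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_toxic) / len(preds_for_toxic) if preds_for_toxic else 0.0


if __name__ == "__main__":
    # Toy labels: 1 = toxic, 0 = non-toxic (not real evaluation data)
    y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
    y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]
    print(accuracy(y_true, y_pred), majority_baseline(y_true), toxic_recall(y_true, y_pred))
```

Here the toy model reaches 0.8 accuracy, exactly the same as always predicting non-toxic, while catching only half of the toxic comments.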

Now, let’s prepare a non-toxic forum web application where we can write comments. When we write a non-toxic comment, the model won’t block it.

On the other hand, toxic comments will be blocked and the user will be warned.

Of course, this is only client-side validation: it runs in the user's browser, so it can discourage users from writing toxic comments but cannot stop a determined user from bypassing it.
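The warn-and-block behaviour can be sketched independently of the model. The sketch below assumes the model returns a toxicity probability between 0 and 1 for the comment text. The 0.5 threshold, the `check_comment` function and the `Verdict` type are hypothetical names chosen for illustration. In the real application, this logic runs in JavaScript in the browser rather than in Python.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    allowed: bool  # whether the comment may be posted
    score: float   # toxicity probability returned by the model
    message: str   # warning shown to the user (empty if allowed)


def check_comment(text: str, score_fn: Callable[[str], float], threshold: float = 0.5) -> Verdict:
    """Block the comment and warn the user when the toxicity score reaches the threshold."""
    score = score_fn(text)
    if score >= threshold:
        return Verdict(False, score, "This comment looks toxic. Please rephrase it.")
    return Verdict(True, score, "")


if __name__ == "__main__":
    # Stand-in scorer: a real app would call the TensorFlow Lite model here
    def fake_score(text: str) -> float:
        return 0.9 if "idiot" in text.lower() else 0.1

    print(check_comment("Nice article, thanks!", fake_score))
    print(check_comment("You are an idiot", fake_score))
```

Passing the scorer in as a parameter keeps the decision rule testable without loading the model. The threshold trades missed toxic comments against false warnings on harmless ones.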

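To run the example, simply clone the Git repository and start a simple HTTP server to serve the static page (`python3 -m http.server` listens on port 8000 by default, so the page is then available at http://localhost:8000):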

```bash
git clone https://github.com/qooba/ai-toxicless-texts.git
cd ai-toxicless-texts
python3 -m http.server
```

The code for preparing the data, training and exporting the model is available in this notebook: https://github.com/qooba/ai-toxicless-texts/blob/master/Model_Maker_Toxicity.ipynb